This summer, I had the opportunity to test YourText.guru and Cocon.se, two well-known tools for optimizing websites.

To help people who work with both R and SEO, I created a first R package that makes it easy to use the YourText.guru API.

I'll begin by introducing YourText.guru and the open-source R package I created especially for the occasion: https://github.com/voltek62/writingAssistantR

If you prefer Python, Julien Deneuville created this version: https://gitlab.com/databulle/python_ytg

YourText.guru

YourText.guru is a service that generates Guides to help you write content targeting a given query, with the stated goal of saving you time.

These Guides are generated using two algorithms:

- one that imitates the processing performed by a search engine;

- one that retrieves texts related to the subject and determines the most important key expressions.

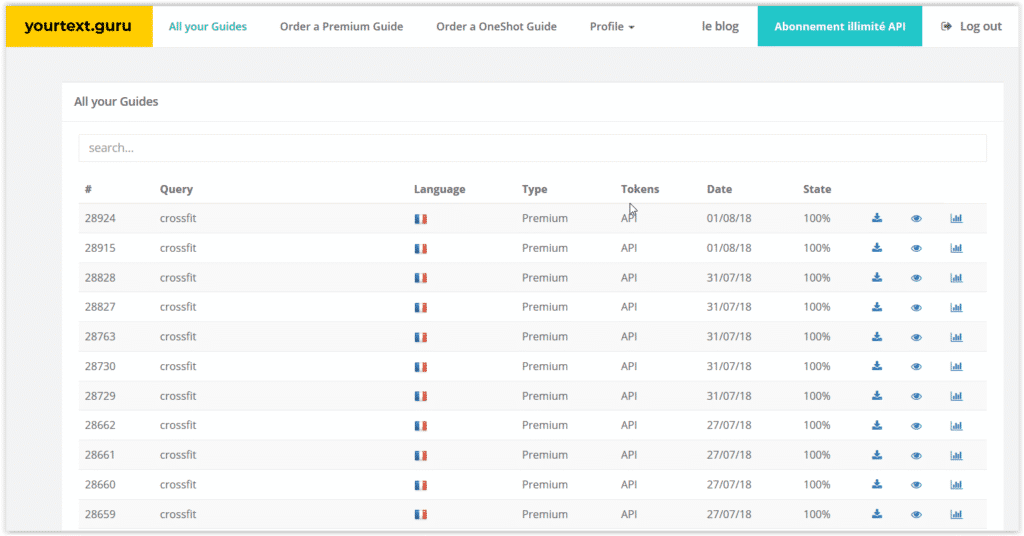

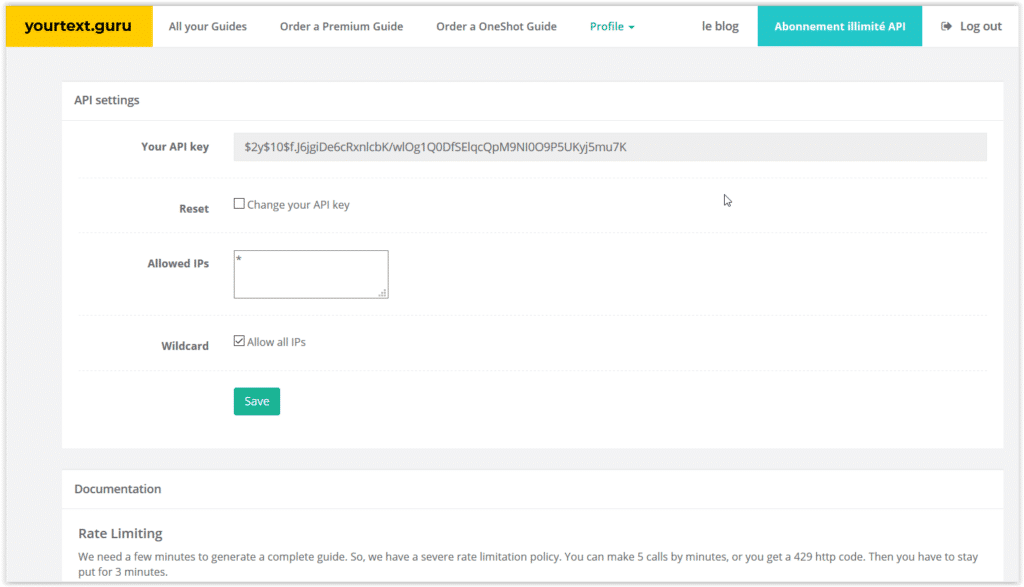

Get your token

You need access to the API, which cost €100/month at the time of writing.

Go to this page to find your API key: https://yourtext.guru/profil/api

Copy this key into a text file named ytg_configuration.txt at the root of your project:

```
token=YOURAPIKEY
debug=FALSE
```
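The package's initAPI() function takes care of reading this file for you. If you want to reuse the token in your own scripts, here is a minimal sketch of how such a key=value file can be read in plain R. The helper read_ytg_config is hypothetical, and it assumes one key=value pair per line; writingAssistantR may parse the file differently.

```r
# Hypothetical helper (not part of writingAssistantR): parse a key=value
# config file into a named list. Assumes one "key=value" pair per line.
read_ytg_config <- function(path) {
  lines <- trimws(readLines(path, warn = FALSE))
  lines <- lines[nzchar(lines)]            # drop empty lines
  key   <- sub("=.*$", "", lines)          # text before the first "="
  value <- sub("^[^=]*=", "", lines)       # text after the first "="
  config <- as.list(value)
  names(config) <- key
  # Convert the debug flag from the string "TRUE"/"FALSE" to a logical
  config$debug <- identical(toupper(config$debug), "TRUE")
  config
}

# Example with a temporary file instead of your real key
path <- tempfile(fileext = ".txt")
writeLines(c("token=YOURAPIKEY", "debug=FALSE"), path)
config <- read_ytg_config(path)
config$token
config$debug
```

Splitting on the first "=" only means a token that itself contains "=" characters is kept intact.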

Install my R package

You need RStudio and a working knowledge of R basics. Run these lines in the R console:

```r
# install.packages("devtools")  # only if devtools is not installed yet
library(devtools)
install_github("voltek62/writingAssistantR")
```

To initialize the package, use the following code. Be careful: the initAPI function reads your token from the text file “ytg_configuration.txt”, so create that file first.

The getStatus function simply checks that the connection works.

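```r
library(RCurl)
library(rjson)
library(XML)
library(writingAssistantR)

initAPI()              # reads the token from ytg_configuration.txt
status <- getStatus()  # checks that the connection works
```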

Create a Guide

Creating a Guide is simple: specify your query, the language (for example en_us or en_gb) and the Guide mode you want:

– The Premium Guide is designed to provide a list of all essential and important words related to a query.

– The Oneshot Guide is designed to provide a template for writing a text of approximately 300 words.

I added a loop that waits until the process finishes, since it can take a few minutes.

```r
guide <- createGuide("crossfit", "en_us", "premium")
guide_id <- guide$guide_id

# getGuide() returns "error" as long as the Guide is not ready
guide.all <- getGuide(guide_id)
while (identical(guide.all, "error")) {
  print("Your guide is currently being created.")
  Sys.sleep(40)
  guide.all <- getGuide(guide_id)
}

print("Your guide is ready")
```
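The polling loop waits indefinitely if the Guide never becomes ready. A slightly more defensive variant caps the number of attempts. This is a generic sketch: wait_for_result and max_tries are hypothetical names, and it only assumes, like the loop above, that getGuide() returns "error" while the Guide is still being built. A stub function stands in for getGuide() so the code runs without an API key.

```r
# Hypothetical helper: call fetch() until it returns something other than
# "error", waiting `delay` seconds between attempts, up to `max_tries` times.
wait_for_result <- function(fetch, max_tries = 15, delay = 40) {
  for (attempt in seq_len(max_tries)) {
    result <- fetch()
    if (!identical(result, "error")) return(result)
    message("Attempt ", attempt, ": your guide is currently being created.")
    if (attempt < max_tries) Sys.sleep(delay)
  }
  stop("Guide still not ready after ", max_tries, " attempts")
}

# Stub standing in for function() getGuide(guide_id):
# returns "error" twice, then a made-up list.
calls <- 0
fake_get_guide <- function() {
  calls <<- calls + 1
  if (calls < 3) "error" else list(status = "ready")
}

guide.all <- wait_for_result(fake_get_guide, max_tries = 5, delay = 0)
```

With the real API you would call `guide.all <- wait_for_result(function() getGuide(guide_id))`, which keeps the 40-second pause but gives up after about ten minutes instead of looping forever.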

Get your scores for a URL

With the R package this is very simple: just pass your URL and the ID of the Guide created earlier.

```r
url <- "http://www.wodnews.com"
scores <- checkGuide(guide_id, url)
```

You will get two scores:

– soseo: the overall optimization score of the submitted text (100% corresponds to a text optimized up to the maximum of the normal range).

– dseo: the SEO risk score. This percentage can exceed 100% in some extreme cases.

How to get the main text

I tested several tricks to extract the main text of a page directly. The best method I found is this XPath query:

```
//text()[not(ancestor::select)][not(ancestor::script)][not(ancestor::style)][not(ancestor::noscript)][not(ancestor::form)][string-length(.) > 10]
```

To go further on this subject, I recommend reading:

– https://moz.com/devblog/benchmarking-python-content-extraction-algorithms-dragnet-readability-goose-and-eatiht/

– https://boilerpipe-web.appspot.com/

```r
# download html, following redirects
html <- getURL(url, followlocation = TRUE)

# parse html
doc <- htmlParse(html, asText = TRUE)

# keep text nodes outside select/script/style/noscript/form, longer than 10 characters
plain.text <- xpathSApply(doc, "//text()[not(ancestor::select)][not(ancestor::script)][not(ancestor::style)][not(ancestor::noscript)][not(ancestor::form)][string-length(.) > 10]", xmlValue)
txt <- paste(plain.text, collapse = " ")
```
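To see what the XPath filter keeps, you can run it on a small inline HTML snippet instead of a downloaded page. The snippet below is made up for illustration; it uses the same XML functions as the code above (htmlParse, xpathSApply, xmlValue) and needs no network access.

```r
library(XML)

# Made-up HTML: one real paragraph, plus script, form and very short text
html <- paste0(
  "<html><body>",
  "<script>var tracking = 'some script code';</script>",
  "<p>This paragraph is the main content of the page.</p>",
  "<form><input type='text'/>Subscribe to our newsletter</form>",
  "<p>Menu</p>",
  "</body></html>"
)

xpath <- paste0(
  "//text()",
  "[not(ancestor::select)][not(ancestor::script)][not(ancestor::style)]",
  "[not(ancestor::noscript)][not(ancestor::form)]",
  "[string-length(.) > 10]"  # drop very short fragments such as menu items
)

doc <- htmlParse(html, asText = TRUE)
plain.text <- xpathSApply(doc, xpath, xmlValue)
txt <- paste(plain.text, collapse = " ")
txt
```

Only the paragraph should survive: the script is excluded by `ancestor::script`, the newsletter text by `ancestor::form`, and "Menu" by the 10-character length threshold.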

Get your scores for many URLs

Here is one way to get the first 100 Google results with rvest, but there are many others.

```r
library(rvest)
library(httr)
library(stringr)
library(dplyr)

query <- URLencode("crossfit france")
page <- paste("https://www.google.fr/search?num=100&espv=2&btnG=Rechercher&q=", query, "&start=0", sep = "")
webpage <- read_html(page)

googleTitle <- html_nodes(webpage, "h3 a")
googleTitleText <- html_text(googleTitle)
hrefgoogleTitleLink <- html_attr(googleTitle, "href")
googleTitleLink <- str_replace_all(hrefgoogleTitleLink, "/url\\?q=|&sa=(.*)", "")
```
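The str_replace_all() call strips Google's redirect wrapper from each link. Here is the same regular expression applied with base R's gsub() to two made-up href values, followed by the grepl("http", ...) filter used in the scoring step, so you can check the cleaning logic offline. The sample hrefs are illustrative only.

```r
# Made-up examples of href values as found in Google's result HTML
hrefs <- c(
  "/url?q=https://www.example.com/crossfit&sa=U&ved=0ahUKE",
  "/search?q=crossfit+france&tbm=isch"
)

# Remove the "/url?q=" prefix and everything from "&sa=" onwards
links <- gsub("/url\\?q=|&sa=(.*)", "", hrefs)

# Keep only absolute links, like filter(grepl("http", Link))
links <- links[grepl("http", links)]
links
```

The second href is an internal Google link with no "/url?q=" wrapper, so it is left unchanged by the regular expression and then dropped by the "http" filter.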

Now comes the interesting part: getting the scores for each URL.

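```r
library(dplyr)

DF <- data.frame(Title = googleTitleText, Link = googleTitleLink,
                 score = 0, danger = 0, stringsAsFactors = FALSE) %>%
  filter(grepl("http", Link))

for (i in seq_len(nrow(DF))) {
  link <- DF$Link[i]
  tryCatch({
    scores <- checkGuide(guide_id, link)
    # skip URLs for which checkGuide returned "error"
    if (!identical(scores, "error")) {
      DF$score[i] <- scores$score
      DF$danger[i] <- scores$danger
    }
  },
  error = function(e) message("Failed on ", link, ": ", conditionMessage(e)),
  finally = Sys.sleep(60))  # pause between API calls, even after a failure
}
```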

You will get a table with a score for each URL.
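Once the table is filled, a natural next step is to sort it and save it. The sketch below uses a small placeholder data frame with the same columns as DF (the values are made up, not real measurements), orders it by score, flags rows whose danger value exceeds 100, assuming that column holds the dseo risk score which can exceed 100% in extreme cases, and writes the result to a CSV file. The example_df name, the high_risk column and the output file name are hypothetical.

```r
# Placeholder data with the same columns as DF (values are made up)
example_df <- data.frame(
  Title  = c("Page A", "Page B", "Page C"),
  Link   = c("https://a.example", "https://b.example", "https://c.example"),
  score  = c(62, 95, 78),
  danger = c(10, 120, 35),
  stringsAsFactors = FALSE
)

# Sort from best to worst optimization score
ranked <- example_df[order(example_df$score, decreasing = TRUE), ]
rownames(ranked) <- NULL

# Flag pages whose risk score exceeds 100% (assumes danger holds dseo)
ranked$high_risk <- ranked$danger > 100

# Save for later analysis (hypothetical file name, in a temp directory)
out <- file.path(tempdir(), "crossfit_scores.csv")
write.csv(ranked, out, row.names = FALSE)
ranked
```

With your real results, replace example_df with DF.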

Conclusion

I hope this will help you analyze your own content and your competitors' content.

If you need to create content, I think it is worth using a writing assistant like YourText.guru, since it is designed for the needs of SEOs, writers and web marketers.

By the end of August, I plan to publish my next article: How to use Cocon.se with R?
