Google search rankings are clearly a strategic matter. 91% of clicks on the world’s most famous search engine are made on the first page of results. But how can you be sure of appearing in the top 10? Which SEO rules should you go by to get guaranteed good results? After all, the tech giant’s algorithm is as mysterious as it is dynamic and ever-changing.

SEO has long been considered magic. In each case, there are at least 500 possible strategies. The challenge is to quickly figure out which strategy will guarantee that you’ll appear on that coveted first page of results. That was what sparked the idea of building a model capable of predicting which pages would rank among the top 10 Google results for specific searches.

So how can you be 92% sure that a URL will rank among the top 10 Google results for a given topic – in just a few minutes? Well, OVH has developed an open-source SEO data tool to support the growth of both the Group and its customers. That tool is called the OVH Ranking Predictive Tool.

Ultra-reliable search ranking prediction

When I first joined OVH, more than two years ago, I met Rémi Bacha, a Data Scientist who has been at OVH since 2012 and is passionate about SEO. The meeting was extremely fruitful. We quickly established that in order to respond to OVH’s hyper-growth, while taking into account all the languages, brands and 60 websites we cover, we needed a tool to automate the process of finding the best strategy and ensure the strongest possible organic search potential.

The combined approach Rémi and I subsequently developed formed the basis of the Ranking Predictive Tool. Rather than relying on intuition or testing each of the 500 strategies manually, the idea is to leverage machine learning to get the most reliable predictions possible. As Rémi put it:

“It is impossible to achieve 100% reliability because of external factors like news items, a competitor appearing on the scene or a penalty from Google. But with a model that is 92% effective on average, we are pretty close.”

Figuring out the best SEO strategy in minutes

Used for more than 90% of web searches in France, Google is based on an algorithm – Google RankBrain – boosted by artificial intelligence. The ranking of a search result depends on many factors and variables, such as the field of activity. Different users have different priorities. For one user, page load time could be the most important factor. For another, it could be the terms used.

The Ranking Predictive Tool therefore makes use of different tools throughout several stages to estimate all these variables and figure out the best possible strategy. First, Ranxplorer is used to retrieve the top 100 keywords related to a particular topic. Each keyword is associated with the URL of the page concerned. Next, tools such as Visiblis or Majestic can be used to draw up a list of ranking factors. The machine learning element then comes into play, forming “random forest” decision trees and adjusting the correct variables to define the most appropriate final strategy.
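To make the prediction target concrete, here is a minimal sketch of how keyword/URL pairs could be turned into training examples, assuming each record carries the page's position in the results plus a few ranking factors. The `RankedPage` class, the factor names and the example URLs are hypothetical and are not taken from the tool's source code.

```python
from dataclasses import dataclass


@dataclass
class RankedPage:
    keyword: str
    url: str
    position: int   # rank of the URL for this keyword (1 = first result)
    factors: dict   # hypothetical ranking factors gathered from SEO tools


def label_top10(pages, cutoff=10):
    """Return (factors, label) pairs; label is 1 when the page ranks within `cutoff`."""
    return [(page.factors, 1 if page.position <= cutoff else 0) for page in pages]


# Illustrative records only (made-up values).
pages = [
    RankedPage("garden shed", "https://example.com/a", 3, {"load_time_s": 0.8, "title_score": 71.0}),
    RankedPage("garden shed", "https://example.com/b", 27, {"load_time_s": 2.4, "title_score": 35.5}),
    RankedPage("lawn mower", "https://example.com/c", 10, {"load_time_s": 1.1, "title_score": 64.2}),
]

labelled = label_top10(pages)
labels = [label for _, label in labelled]
print(labels)
```

A classifier such as a random forest would then be trained on the factors to predict this binary "top 10" label.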

Streamlining the process with Dataiku

In the early days of the Ranking Predictive Tool project, I created an open-source project (OVH GitHub) and shared the source code in R, but unfortunately, it couldn’t be used without knowledge of the R language. Eventually, I found a way to streamline the use of prediction algorithms with a platform named “Dataiku”, a data science platform that offers a free edition. Let’s take a closer look at how this tool helped streamline our process for generating accurate ranking predictions.

The following example describes how to use XGBoost (although the same process could be used with various other algorithms) with a dataset of 200,000 records, including 2,000 distinct keywords/search terms.

Step 1: Install Dataiku

First, you need to install Dataiku on your platform. Just follow this tutorial to do so: https://www.dataiku.com/dss/trynow/

For this example, I used Dataiku Free Edition for Virtualbox, but whatever version you opt for, your data stays in your infrastructure, and there is no limitation in terms of the volume of data processed. Once it’s installed, you can log in straight away.

Step 2: Create your project

You just need to choose a name. Once you’ve done so, you’re ready to import your files. Click on your project link and click on “Import Dataset.”

Step 3: Prepare a new dataset

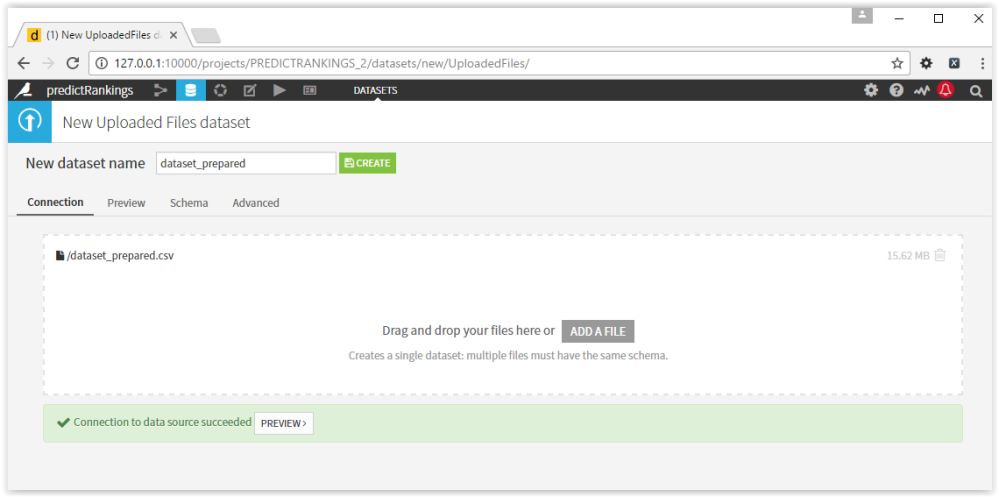

Download my prepared dataset (https://github.com/ovh/summit2016-RankingPredict/raw/master/dataset/dataset_prepared.csv) and click on ‘Upload Your Files’ to create your first dataset.

Click on “Finish”, and choose a name for your dataset (dataset_garden_queries, for example).

Step 4: Create your first analysis

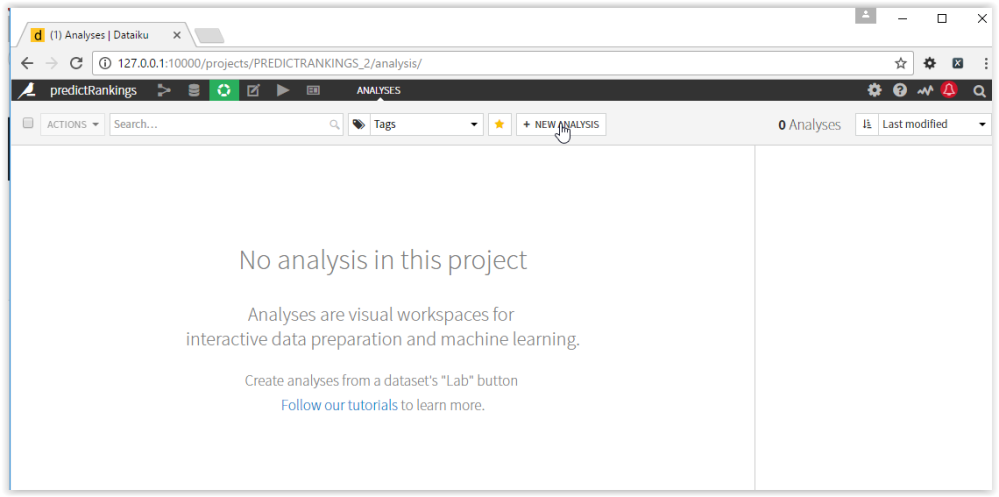

Click on the green wheel in the menu.

Click on “New analysis”, choose the previous dataset, and click on “Create Analysis.”

For the two Visiblis columns, you need to remove the rows that are invalid for the Decimal meaning. To do so, click on the “Visiblis_Title” column name and choose this function.
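Outside Dataiku, the same clean-up could be approximated in a few lines of Python. This is only a sketch of the idea, not what Dataiku runs internally: the `Visiblis_Title` column name comes from the dataset, while `Visiblis_Other` and the sample values are hypothetical placeholders.

```python
import csv
import io
import math


def is_decimal(value):
    """True if the cell parses as a finite decimal number."""
    try:
        return math.isfinite(float(value))
    except (TypeError, ValueError):
        return False


def drop_invalid_rows(rows, columns):
    """Keep only the rows where every listed column holds a valid decimal."""
    return [row for row in rows if all(is_decimal(row.get(col)) for col in columns)]


# Made-up sample; "Visiblis_Other" is a hypothetical second column name.
sample = io.StringIO(
    "keyword,Visiblis_Title,Visiblis_Other\n"
    "garden shed,0.82,0.40\n"
    "lawn mower,N/A,0.55\n"
    "garden hose,0.61,\n"
    "garden gnome,0.47,0.12\n"
)

rows = list(csv.DictReader(sample))
clean = drop_invalid_rows(rows, ["Visiblis_Title", "Visiblis_Other"])
print([row["keyword"] for row in clean])
```

Rows with a non-numeric or empty value in either column are dropped, which mirrors removing invalid rows for the Decimal meaning.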

Choose “Reject” to ignore a feature and ensure your model only works with your pertinent data.

Be very patient (I recommend getting a coffee!), as XGBoost is very efficient but still takes a while to run.

Dataiku has industrialised all the steps needed to produce the model: load train set, load test set, collect statistics, pre-process train set, pre-process test set, fit model, save model and score model. You can therefore save a lot of time by testing different algorithms at the same time and comparing their accuracy.
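To give a feel for what these steps involve, here is a deliberately tiny sketch of the split, fit and score stages. It assumes a single numeric feature and swaps in a one-threshold "decision stump" for XGBoost purely to stay self-contained; the data is synthetic and every function name is hypothetical.

```python
import random


def split(records, test_ratio=0.25, seed=42):
    """Shuffle the records and cut them into a train set and a test set."""
    shuffled = records[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_ratio))
    return shuffled[:cut], shuffled[cut:]


def fit_stump(train):
    """Pick the feature threshold that classifies the most training rows correctly."""
    best_threshold, best_correct = None, -1
    for candidate in sorted({x for x, _ in train}):
        correct = sum((x >= candidate) == bool(y) for x, y in train)
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


def score(threshold, rows):
    """Share of rows whose label matches the prediction `x >= threshold`."""
    return sum((x >= threshold) == bool(y) for x, y in rows) / len(rows)


# Synthetic data: one feature value and a "top 10" label (1 when the feature is >= 50).
records = [(x, 1 if x >= 50 else 0) for x in range(100)]

train, test = split(records)
threshold = fit_stump(train)
accuracy = score(threshold, test)
print(f"threshold={threshold}, test accuracy={accuracy:.2f}")
```

The point of scoring on a held-out test set is that accuracy measured on the training rows alone would be overly optimistic.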

Step 5: Check results

Be careful while analysing the results, because they are valid only for the specified dataset, in its topic, within the specified period. Google personalises search engine results pages with more than 300 factors, including location, device, language, topic, etc.

In this example, you should be able to get a good idea of what is working effectively. I think a good approach is to include both the best and the worst results for each term/keyword, so that the ML algorithm is able to either confirm or reject a feature.

Now that you have established the accuracy of each algorithm, you can hopefully see the importance of the variables. Click on the link of your algorithm to access a menu where you can discover your important variables and measure the performance of your algorithm with a selection of different methods (ROC curve, confusion matrix, decision chart, etc.).
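As a reminder of what a confusion matrix measures, here is a small sketch that computes one by hand for a binary “top 10 / not top 10” prediction. The labels and predictions are made up for illustration and the function name is hypothetical.

```python
def confusion_matrix(actual, predicted):
    """Count true/false positives and negatives for binary labels (1 = top 10)."""
    pairs = list(zip(actual, predicted))
    return {
        "tp": sum(1 for a, p in pairs if a == 1 and p == 1),
        "fp": sum(1 for a, p in pairs if a == 0 and p == 1),
        "fn": sum(1 for a, p in pairs if a == 1 and p == 0),
        "tn": sum(1 for a, p in pairs if a == 0 and p == 0),
    }


# Made-up example: 1 means the URL is (or is predicted to be) in the top 10.
actual = [1, 1, 0, 0, 1, 0, 1, 0]
predicted = [1, 0, 0, 0, 1, 1, 1, 0]

cm = confusion_matrix(actual, predicted)
accuracy = (cm["tp"] + cm["tn"]) / len(actual)
precision = cm["tp"] / (cm["tp"] + cm["fp"])
recall = cm["tp"] / (cm["tp"] + cm["fn"])
print(cm, accuracy, precision, recall)
```

Here accuracy is the share of correct predictions overall, precision is the share of predicted top 10 pages that really are in the top 10, and recall is the share of real top 10 pages that the model found.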

Going further

This is a good example of how you can import and manipulate a dataset quickly and use prediction algorithms with a single click, but it’s just the first step. If you want to test your prediction model on a new or updated page, you can follow this great tutorial: https://www.dataiku.com/learn/guide/tutorials/103-part2.html

Furthermore, Dataiku allows you to code in R or Python, and makes it easy to share your whole workflow by putting the source code in a zip file.

Developing together with an open-source approach

The Ranking Predictive Tool offers a potential gain of three to five days for SEO projects. It now takes just a few minutes to figure out the right strategy, which, in an environment as competitive and fast-changing as SEO, is a significant advantage.

The SEO world’s fast-paced evolution is the main reason the Ranking Predictive Tool project was designed using open-source code. This way, the tool benefits from our community’s collective intelligence, so that everyone can benefit from its capabilities. This approach may appear unusual in SEO circles, but it’s fully in keeping with OVH’s wider mission to constantly evolve, and help our customers grow along with us.

My team and I are fortunate that OVH placed its trust in us to develop this tool internally, and are delighted with the initial feedback we’ve received. Since it was launched, the Ranking Predictive Tool project has been widely praised and copied, and was recently a nominee at the 2018 SEO Boost awards, which we’re very proud of. In fact, we are already working on a second version, which will take into account even more parameters and be accessible to even more people thanks to an API.
