My first English post is about how to generate the internal link graph of any site with RStudio.

Process and Tools

You need RStudio with the packages igraph, sna and dplyr.

To install a package, just type in the console: install.packages("igraph")

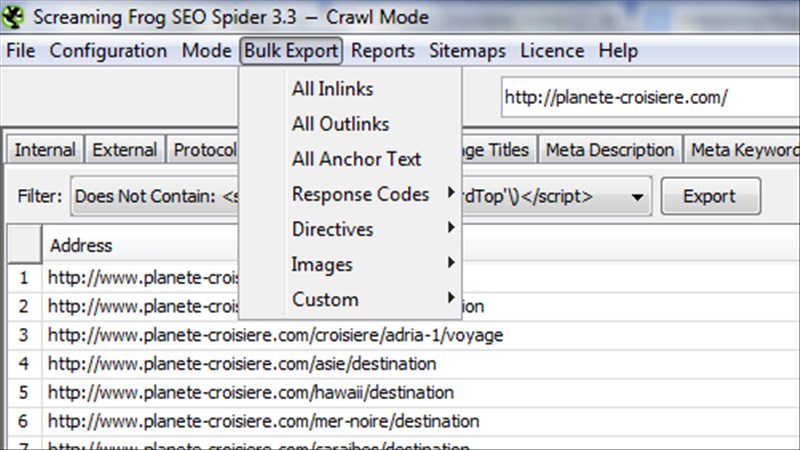

The process requires exporting the URLs crawled with Screaming Frog.

When the crawl is finished, you choose “Bulk Export” then “All Outlinks”.

Now you are ready to use RStudio.

R Code

You need to create a dataframe with the file generated by Screaming Frog.

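# load the packages used below

library(dplyr)

library(igraph)

# values to adapt to your site (examples only)

file_csv <- "all_outlinks.csv" # path to the Screaming Frog export

website_url1 <- "https://www.example.com" # site root to strip from URLs

website_url2 <- "http://www.example.com" # second variant of the root, e.g. http

# import the Screaming Frog export

## skip first line

DF <- read.csv2(file_csv, header=TRUE, sep = ",", stringsAsFactors = FALSE, skip=1 )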

## we keep only link

DF <- DF[DF$Type=="HREF",]

DF <- select(DF,Source,Destination)

DF <- as.data.frame(sapply(DF,gsub,pattern=website_url1,replacement=""))

DF <- as.data.frame(sapply(DF,gsub,pattern=website_url2,replacement=""))

DF <- as.data.frame(sapply(DF,gsub,pattern="\"",replacement=""))

## drop links that are still absolute URLs (subdomains and external sites)

DF <- subset(DF, !grepl("^http", DF$Source))

DF <- subset(DF, !grepl("^http", DF$Destination))

## adapt colnames and rownames

colnames(DF) <- c("From","To")

rownames(DF) <- NULL
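To see what these cleaning steps do, here is a minimal sketch on a hand-made data frame. The URLs and the `site_root` value are invented for illustration; only the column names Type, Source and Destination come from the export used above.

```r
# Toy stand-in for the Screaming Frog "All Outlinks" export (hypothetical URLs)
links <- data.frame(
  Type        = c("HREF", "HREF", "IMG", "HREF"),
  Source      = c("https://www.example.com/", "https://www.example.com/a",
                  "https://www.example.com/a", "https://www.example.com/b"),
  Destination = c("https://www.example.com/a", "https://www.example.com/b",
                  "https://www.example.com/logo.png", "https://other.example.org/"),
  stringsAsFactors = FALSE
)

site_root <- "https://www.example.com"   # assumption: root URL of the crawled site

# Keep hyperlinks only, and only the two columns that define an edge
links <- links[links$Type == "HREF", c("Source", "Destination")]

# Strip the site root so internal URLs become relative paths
links$Source      <- sub(site_root, "", links$Source, fixed = TRUE)
links$Destination <- sub(site_root, "", links$Destination, fixed = TRUE)

# Anything still starting with "http" points to another host: drop it
links <- links[!grepl("^http", links$Source) & !grepl("^http", links$Destination), ]

colnames(links) <- c("From", "To")
rownames(links) <- NULL
print(links)
```

The image link and the link to the other host are gone, and the two remaining rows are the internal edges of the graph.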

# generate graph with data.frame

graphObject = graph.data.frame(DF, directed = TRUE)

# remove duplicate links and self-links; the graph stays directed so that pagerank follows link direction

graphObject = simplify(graphObject)
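To illustrate what simplify() does to a link list, here is a small sketch. The edge list is hypothetical; the function names are the current igraph ones (graph_from_data_frame is the newer name for graph.data.frame).

```r
library(igraph)

# Hypothetical edge list with a repeated link and a self-link
edges <- data.frame(
  From = c("/", "/", "/a", "/a"),
  To   = c("/a", "/a", "/a", "/b")
)

g <- graph_from_data_frame(edges, directed = TRUE)
g_simple <- simplify(g)   # removes repeated edges and loops by default

print(ecount(g))         # number of edges before
print(ecount(g_simple))  # number of edges after
```

Only one copy of each link is kept, which matters for large sites where the same navigation links appear on every page.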

Visualize the data

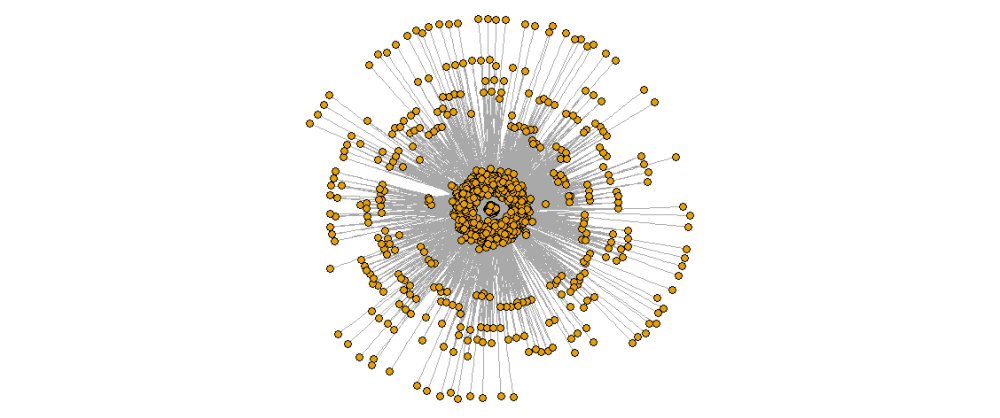

The screenshot above shows the graphs being generated.

Be patient: it can take 5 minutes for a graph with 74 000 inlinks.

The plotting functions come from the ‘igraph’ package, so make sure it is loaded.

library(igraph)

Note that igraph’s excellent documentation is accessible via the command ?igraph.

Now we plot the simplified graph:

plot(graphObject,

layout=layout.fruchterman.reingold,

vertex.size = 4, # Smaller nodes

vertex.label = NA, # Hide the labels

vertex.label.color = "black")

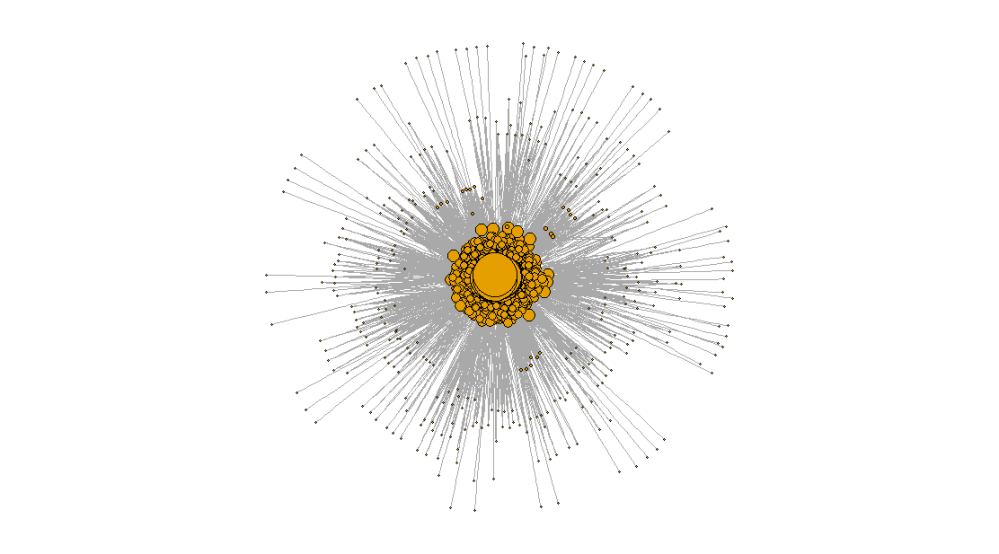

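Now I use PageRank, the algorithm Google introduced, to adjust node sizes. The map function used below to rescale the scores is defined in the “Update 2016” section at the end of this post; run it first.

# calculate pagerank

pr <- page.rank(graphObject,directed=TRUE)

# plot the graph with node size proportional to pagerank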

plot(graphObject,

layout=layout.fruchterman.reingold,

vertex.size = map(pr$vector, c(1,20)),

vertex.label = NA,

vertex.label.color = "black",

edge.arrow.size=.2

)

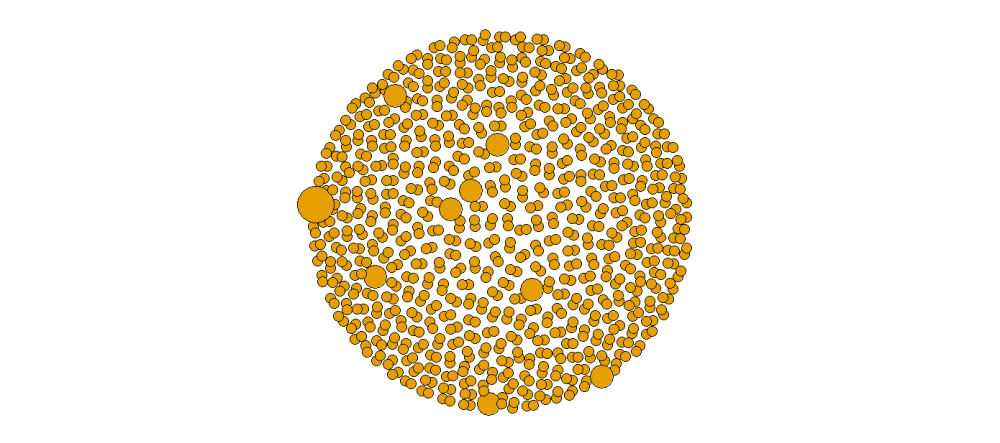

It is easy to see that PageRank is badly distributed and that the internal linking is unbalanced.
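The imbalance can also be read from the numbers rather than from the picture. The sketch below uses a made-up five-link site, not the data from the crawl, and sorts pages by their score.

```r
library(igraph)

# Hypothetical internal links: every page links back to the home page "/"
edges <- data.frame(
  From = c("/", "/", "/a", "/b", "/c"),
  To   = c("/a", "/b", "/", "/", "/")
)

g  <- graph_from_data_frame(edges, directed = TRUE)
pr <- page_rank(g, directed = TRUE)   # default damping factor is 0.85

# Pages sorted from highest to lowest PageRank
ranking <- sort(pr$vector, decreasing = TRUE)
print(round(ranking, 3))
```

On a real site, a handful of pages far ahead of all the others in this ranking is the numeric counterpart of the few large nodes in the plot.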

Example with good internal linking

A website with good internal linking can look like a spheroid: everything is connected, so whatever page Googlebot enters through, it can crawl and reach the other parts of your site.

Conclusion

This can be extremely helpful to better understand how a site is internally linked and to detect areas of the site (orphan pages) that are not connected with the rest.
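Poorly connected areas can also be listed directly from the graph. This is a minimal sketch on an invented link list; note that a page nothing links to only appears in a crawl export if the crawler found it some other way, for example through a sitemap.

```r
library(igraph)

# Hypothetical link list: "/old" links out but receives no link,
# and "/x" and "/y" only link to each other
edges <- data.frame(
  From = c("/", "/a", "/old", "/x", "/y"),
  To   = c("/a", "/", "/a", "/y", "/x")
)
g <- graph_from_data_frame(edges, directed = TRUE)

# Pages that receive no internal link at all
no_inlinks <- names(which(degree(g, mode = "in") == 0))

# Groups of pages that are not connected to each other, ignoring link direction
comp    <- components(g, mode = "weak")
islands <- split(V(g)$name, comp$membership)

print(no_inlinks)
print(islands)
```

Any group other than the one containing the home page is an island that neither visitors nor crawlers starting from the home page can reach.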

If you want more information on how to improve your internal linking, I suggest this post: http://www.wikiweb.com/internal-linking-structure/

Update 2016

You need this “map” function, which rescales the PageRank values into node sizes:

map <- function(x, range = c(0,1), from.range=NA) {

if(any(is.na(from.range))) from.range <- range(x, na.rm=TRUE)

## check if all values are the same

if(!diff(from.range)) {

x[] <- mean(range) # works for vectors and matrices, keeps names and dimensions

return(x)

}

## map to [0,1]

x <- (x-from.range[1])

x <- x/diff(from.range)

## handle single values

if(diff(from.range) == 0) x <- 0

## map from [0,1] to [range]

if (range[1]>range[2]) x <- 1-x

x <- x*(abs(diff(range))) + min(range)

x[x<min(range) | x>max(range)] <- NA

x

}
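To show what the rescaling does, here is a stripped-down stand-in for the function above. The name `rescale_to` and the scores are made up for this example; it only handles plain numeric vectors.

```r
# Simplified stand-in for the map function above (hypothetical helper):
# linearly rescales a numeric vector to the interval [lo, hi]
rescale_to <- function(x, lo, hi) {
  r <- range(x, na.rm = TRUE)
  if (r[1] == r[2]) return(rep((lo + hi) / 2, length(x)))  # all values equal
  lo + (x - r[1]) / (r[2] - r[1]) * (hi - lo)
}

scores <- c(home = 0.40, category = 0.25, product = 0.05)  # made-up PageRank values
sizes  <- rescale_to(scores, 1, 20)
print(sizes)
```

The page with the highest score gets the largest node size, the one with the lowest score gets the smallest, and the rest fall in between. Using a name other than map also avoids a clash with purrr::map if that package happens to be loaded.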

You haven’t defined website_url1 and website_url2.

Possible to expand on the article for someone new to R? In the first code block, for creating the dataframe, what information do I need to change? I assume on this line:

DF <- read.csv2(file_csv, header=TRUE, sep = ",", stringsAsFactors = F, skip=1 )

Do I change 'file_csv' to the path of my Screaming Frog CSV? Also, you wrote: "When the crawl is finished, you choose “Bulk Export” then “All Links”". "All Links" is ambiguous. Do you mean outlinks?

Are there other things I'm supposed to change as well?

For your first question, replace “file_csv” with the path of the CSV generated by Screaming Frog.

Finally, you are right: you choose “All Outlinks”.

Vincent, Hi! Awesome work!! I’m struggling with one part of this. In the final section of your code, where you run the pagerank mapping I get the error:

Error in match.fun(f) :

‘pr$vector’ is not a function, character or symbol

I had to change the spelling of map to Map, otherwise I got “map” is not a function. After changing it to “Map” I get the above error. Can you help at all?

Hi Stephen, you are in luck: yesterday I published a new article with my map function:

https://github.com/voltek62/SEO-Dashboard/blob/master/step3_computeInternalPageRank.R#L11

Source : http://data-seo.com/2016/06/05/dashboard-seo-part-2/

Ahh that’s great!!! I’ll check this out very soon! Thank you for sharing this. I’m very, very new to R but am fascinated already.

Small question: why do you export outlinks rather than inlinks to calculate the page rank?

Quoting from the original Google paper, PageRank is defined like this:

We assume page A has pages T1…Tn which point to it (i.e., are citations). The parameter d is a damping factor which can be set between 0 and 1. We usually set d to 0.85.

Also C(A) is defined as the number of links going out of page A. The PageRank of a page A is given as follows:

PR(A) = (1-d) + d (PR(T1)/C(T1) + … + PR(Tn)/C(Tn))

That’s correct, but you want to calculate the internal PageRank, so why also take into account links that point to external domains? The Inlinks report in Screaming Frog only reports internal links to URIs, so it would be a more logical choice.

Yes, you are right, but I removed all external domains.
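For readers who want to see the formula quoted above at work, here is a minimal base R sketch that iterates it on an invented three-page site. The page names and links are hypothetical. igraph's page_rank normalises its scores to sum to 1, so the absolute values differ from the ones below even though the ordering of pages is the same idea.

```r
# Direct iteration of the quoted formula:
#   PR(A) = (1 - d) + d * sum(PR(T) / C(T)) over the pages T that link to A
# Hypothetical site: home links to a and b, and both link back to home
links <- data.frame(
  From = c("home", "home", "a", "b"),
  To   = c("a", "b", "home", "home"),
  stringsAsFactors = FALSE
)

d     <- 0.85
pages <- unique(c(links$From, links$To))
out_n <- table(factor(links$From, levels = pages))   # C(T): outlinks per page
pr    <- setNames(rep(1, length(pages)), pages)      # start every page at 1

for (i in 1:100) {
  # each link passes PR(source) / C(source) to its destination
  share    <- pr[links$From] / as.numeric(out_n[links$From])
  incoming <- tapply(share, factor(links$To, levels = pages), sum)
  incoming[is.na(incoming)] <- 0                     # pages with no inlinks
  pr <- setNames((1 - d) + d * as.numeric(incoming), pages)
}

print(round(pr, 3))
```

Only the From and To columns are needed, which is why an outlinks export filtered down to internal URLs gives the same edges as an inlinks export.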